Template Embeddings

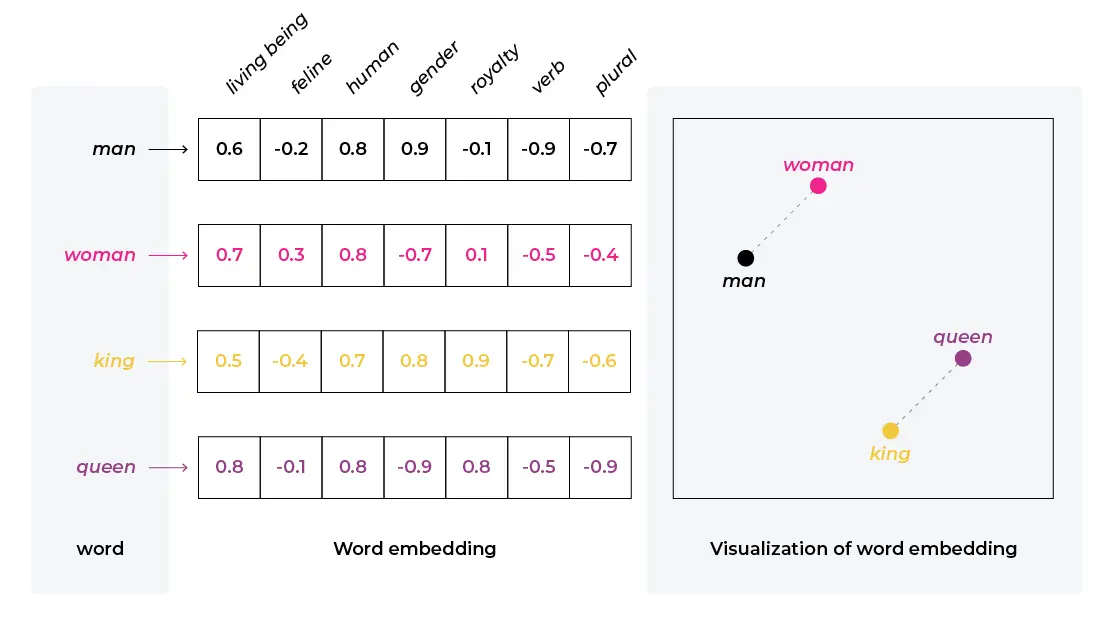

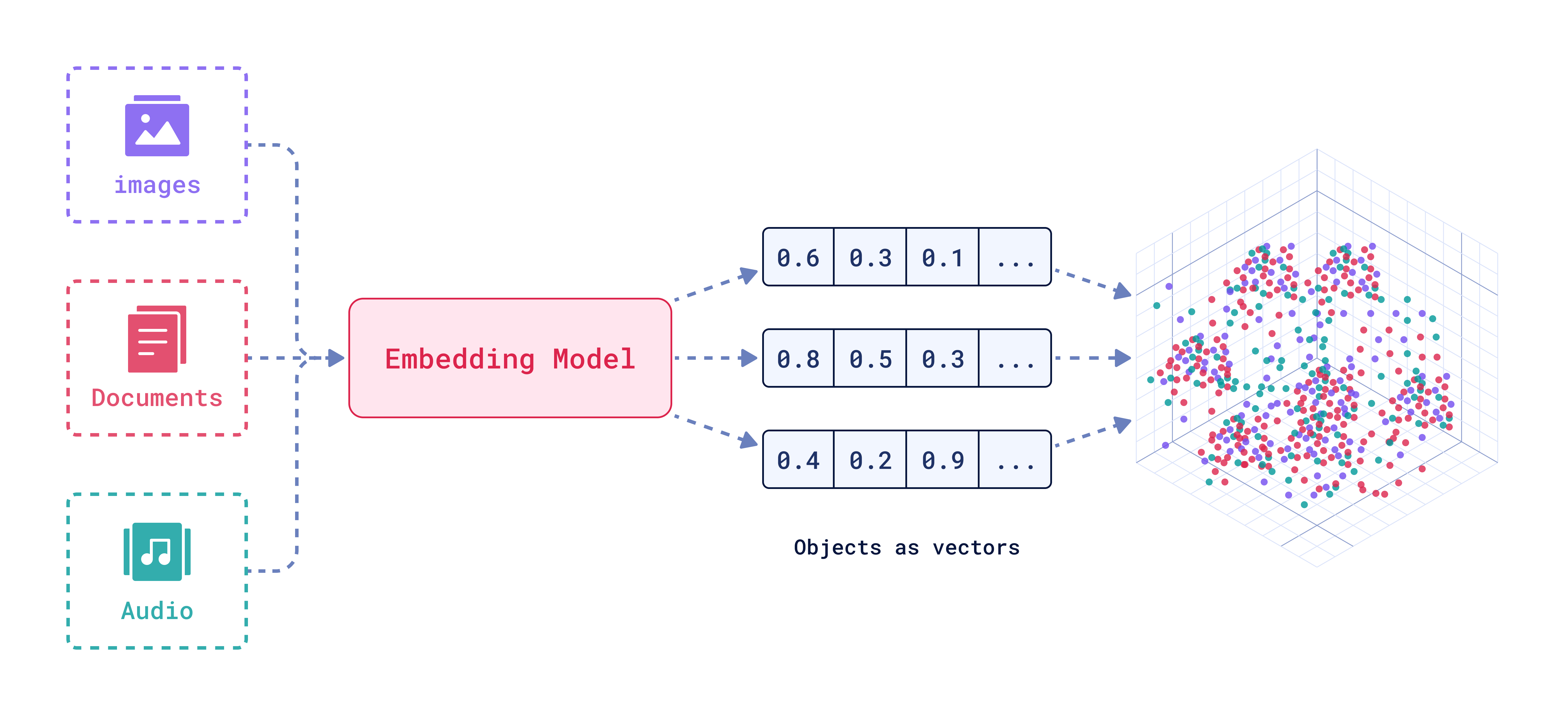

In this article, we'll define what embeddings actually are, how they function within OpenAI's models, and how they relate to prompt engineering.

Embedding is the process of converting text into numbers. Embeddings are used to generate a representation of unstructured data in a dense vector space. They capture the meaning of data in a way that enables semantic similarity comparisons between items, such as text or images.

Embedding models are available in Ollama, making it easy to generate vector embeddings for use in search and retrieval-augmented generation (RAG) applications. The Titan Multimodal Embeddings G1 model translates text inputs (words, phrases, or possibly large units of text) into numerical representations. You can also benefit from the latest features and best practices from Microsoft Azure AI. There are myriad commercial and open embedding models available today, so as part of our generative AI series, we'll showcase a Colab template you can use to compare them.

To generate embeddings at indexing time, create an ingest pipeline that generates vector embeddings from text fields during document indexing. The `input_map` maps document fields to model inputs. The embeddings object will be used to convert text into numerical embeddings.

For training, supply a text file with prompts, one per line. See the files in the `textual_inversion_templates` directory for what you can do with those. Learn about our visual embedding templates.
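To make the idea of a semantic similarity comparison concrete, here is a minimal sketch using cosine similarity, one common way to compare embedding vectors. The three vectors are made-up, three-dimensional stand-ins for real model output (real embeddings typically have hundreds of dimensions), and the function name is an illustrative choice.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for embeddings of three pieces of text.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

print(cosine_similarity(cat, kitten))   # close to 1: vectors point the same way
print(cosine_similarity(cat, invoice))  # close to 0: vectors are nearly orthogonal
```

A score near 1 suggests the two items are close in meaning according to the model that produced the vectors; a score near 0 suggests they are unrelated.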
Convolution blocks serve as local feature extractors and are key to the success of neural networks. To make local semantic feature embedding rather explicit, we reformulate …

We will create a small frequently asked questions (FAQ) engine. This application would leverage the key features of the embeddings template. The embeddings represent the meaning of the text, and this property can be useful for mapping relationships such as similarity.
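A small FAQ engine of the kind mentioned above could be sketched as follows. This is only an illustration under stated assumptions: the FAQ entries are invented, and `toy_embed` is a bag-of-words counter standing in for a real embedding model, so it matches on shared words rather than on meaning. With a real model, only `toy_embed` would need to be swapped out.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical FAQ entries, invented for this sketch.
FAQS: List[Tuple[str, str]] = [
    ("How do I reset my password?",
     "Use the 'Forgot password' link on the sign-in page."),
    ("How can I change my email address?",
     "Open Settings, then Account, and edit the email field."),
    ("What payment methods do you accept?",
     "We accept credit cards and bank transfers."),
]


def toy_embed(text: str) -> Dict[str, float]:
    """Stand-in for an embedding model: a sparse bag-of-words count vector."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {word: float(count) for word, count in Counter(words).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(value * b.get(word, 0.0) for word, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Embed every stored question once, up front.
FAQ_VECTORS = [toy_embed(question) for question, _ in FAQS]


def answer(query: str) -> str:
    """Return the answer whose question is most similar to the query."""
    query_vector = toy_embed(query)
    scores = [cosine(query_vector, vector) for vector in FAQ_VECTORS]
    best = max(range(len(FAQS)), key=scores.__getitem__)
    return FAQS[best][1]


print(answer("I forgot my password, how do I reset it?"))
```

The design choice worth noting is that the stored questions are embedded once and reused, so each incoming query costs one embedding call plus a similarity scan.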
Learn more about using Azure OpenAI and embeddings to perform document search with our embeddings tutorial. The embeddings represent the meaning of the text and can be operated on using mathematical operations. Embedding models can be useful in their own right (for applications like clustering and visual search), or as an input to a machine learning model.

There are two Titan Multimodal Embeddings G1 models. The template for Bigtable to Vertex AI Vector Search files on Cloud Storage creates a batch pipeline that reads data from a Bigtable table and writes it to a Cloud Storage bucket.

The example code begins with `from openai import OpenAI` and defines a class, `class Embedder:`.
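The `from openai import OpenAI` and `class Embedder:` fragment is not shown in full here, so the following is only a guess at what such a class might look like, not the original code. It assumes the `openai` Python package, uses `text-embedding-3-small` as an example model name, and accepts an optional `client` argument (an addition for this sketch) so the class can be exercised with a stub instead of a live API.

```python
from typing import Any, List, Optional


class Embedder:
    """Thin wrapper that turns a list of strings into embedding vectors."""

    def __init__(self, client: Optional[Any] = None,
                 model: str = "text-embedding-3-small") -> None:
        if client is None:
            # Imported lazily so a stub client can be used without the package.
            from openai import OpenAI
            client = OpenAI()  # reads OPENAI_API_KEY from the environment
        self.client = client
        self.model = model  # example model name; an assumption of this sketch

    def embed(self, texts: List[str]) -> List[List[float]]:
        """Return one embedding vector per input string, in input order."""
        response = self.client.embeddings.create(model=self.model, input=texts)
        return [item.embedding for item in response.data]
```

With the package installed and an API key configured, `Embedder().embed(["hello world"])` would return a list containing one vector. Passing a stub `client` makes it possible to test the surrounding code without network access.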


