GLM4 Invalid Conversation Format: tokenizer.apply_chat_template

Upon making the request, the server logs an error related to the conversation format being invalid. Below is the traceback from the server: result = handle_single_conversation(conversation), File /data/lizhe/vlmtoolmisuse/glm_4v_9b/tokenization_chatglm.py, line 172, in ..., ending in raise ValueError("Invalid conversation format"). The surrounding tokenizer code includes content = self.build_infilling_prompt(message) and input_message = self.build_single_message(user, ...).

The conversation argument is typed as Union[list[dict[str, str]], list[list[dict[str, str]]], Conversation], followed by the add_generation_prompt parameter. The main logic to handle different conversation formats starts with: if isinstance(conversation, list) and all(isinstance(i, dict) for i in conversation): ... A Conversation object takes a different path: result = handle_single_conversation(conversation.messages), then input_ids = result[input] and input_images ...

Related reports collected on this page:

"Cannot use apply_chat_template() because tokenizer.chat_template is not set."

"But recently when I try to run it again it suddenly errors: AttributeError", with a traceback reporting that the 'ChatGLMTokenizer' object has no attribute 'sp_tokenizer'.

"The fine-tuning script is the official one; I only adjusted compute_metrics, which should not affect this part." The script imports AutoModelForCausalLM, AutoTokenizer, EvalPrediction, ...

"I created a formatting function and already mapped the dataset to conversational format." "My data contains two keys."

One reproduction script begins with import os, os.environ['CUDA_VISIBLE_DEVICES'] = '0', then query = "你好" ("hello") and inputs = tokenizer. ...

"I am trying to fine-tune Llama 3.1 using Unsloth. Since I am a newbie, I am confused about the tokenizer and prompt template related code and format." "I tried to solve it on my own but ..."

"I want to submit a contribution to LLaMA-Factory. Specifically, the prompt templates do not seem to fit well with GLM4, causing unexpected behavior or errors." "Here is how I've deployed the models: ..."

Not every report traces back to the chat template. "The issue seems to be unrelated to the server/chat template and is instead caused by NaNs in large batch evaluation in combination with partial offloading (determined with llama. ..." A separate error occurs when the provided API key is invalid or expired: verify that your API key is correct and has not expired, and obtain a new key if necessary.

GLM4 Large Model Fine-Tuning, a Hands-On Introduction (Complete Code), CSDN blog

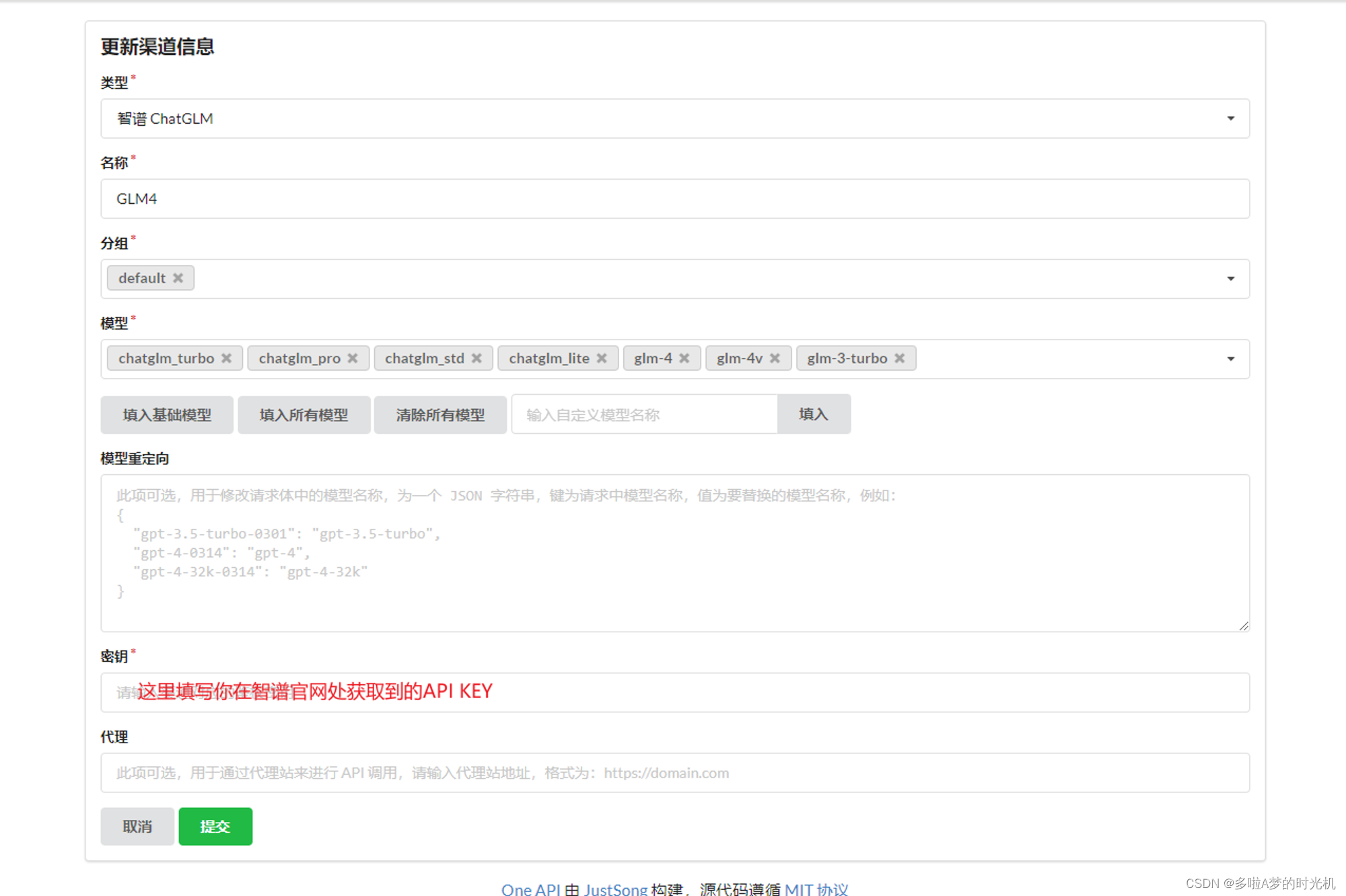

GLM4 in Practice: Local Implementation and Deployment of a GLM4 Agent, CSDN blog

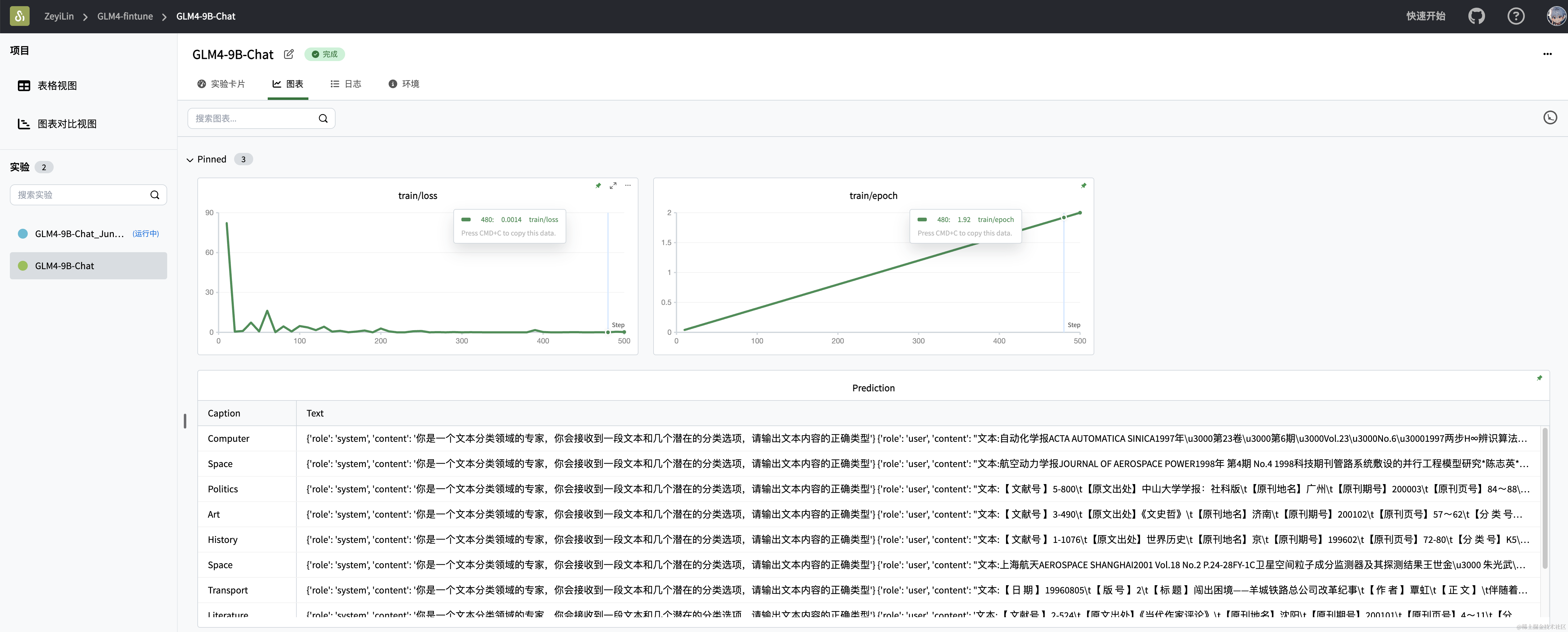

GLM4 Instruction Fine-Tuning in Practice (Complete Code), Natural Language Processing, 林泽毅kavin, BAAI data community

GLM4 Large Model Fine-Tuning, a Hands-On Introduction: Named Entity Recognition (NER) Task, CSDN blog

GLM-4-9B-Chat-1M: access entry point, latest AI model tools and software app downloads

[Machine Learning] GLM-4-9B-Chat Large Model / GLM-4V-9B Multimodal Large Model: Overview, Principles, and Inference in Practice, CSDN blog

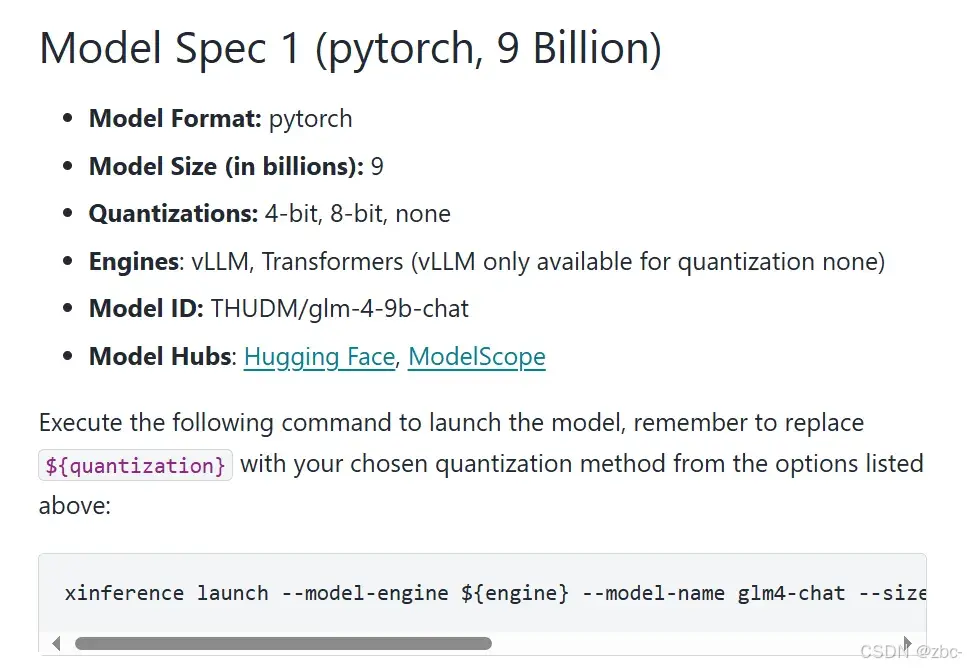

No Errors! Deploying the Local Models glm-4-9b-chat and bge-large-zh-v1.5 with Xinference, CSDN blog

My Data Contains Two Keys.

Below Is The Traceback From The Server:

As of Transformers v4.44, a default chat template is no longer allowed, so you must provide a chat template if the tokenizer does not set one.
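A minimal sketch of one way to handle a missing template: check the tokenizer's chat_template attribute and assign a fallback before calling apply_chat_template. The helper name ensure_chat_template and the FALLBACK_TEMPLATE string are assumptions made for illustration; the template is not GLM4's official one, and a tokenizer class that overrides apply_chat_template in its own code may ignore it.

```python
# Illustrative fallback only (assumption): a generic Jinja chat template,
# NOT the official GLM4 template.
FALLBACK_TEMPLATE = (
    "{% for message in messages %}"
    "<|{{ message['role'] }}|>\n{{ message['content'] }}\n"
    "{% endfor %}"
    "{% if add_generation_prompt %}<|assistant|>\n{% endif %}"
)


def ensure_chat_template(tokenizer, template=FALLBACK_TEMPLATE):
    """Hypothetical helper: set tokenizer.chat_template if it is missing.

    Returns True if the fallback was applied, False if the tokenizer
    already had a template and was left untouched.
    """
    if getattr(tokenizer, "chat_template", None) is None:
        tokenizer.chat_template = template
        return True
    return False


# Intended use with a real tokenizer (not executed here):
#   from transformers import AutoTokenizer
#   tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
#   ensure_chat_template(tokenizer)
#   text = tokenizer.apply_chat_template(
#       messages, tokenize=False, add_generation_prompt=True
#   )
```

Where the model repository ships its own template, that template is the better choice; the fallback only avoids the "chat_template is not set" error and may not match the format the model was trained on.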

result = handle_single_conversation(conversation.messages), input_ids = result[input], input_images ...
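The excerpts on this page suggest the tokenizer accepts a list of message dicts, a list of such lists, or an object with a messages attribute, and raises ValueError for anything else. A minimal sketch of a pre-check that mirrors that dispatch, so malformed data (for example a dict with two unrelated keys) is caught before it reaches the tokenizer. The function name classify_conversation and its return labels are hypothetical; this is not the code from tokenization_chatglm.py.

```python
from typing import Any


def _is_message_list(obj: Any) -> bool:
    # Same test as the quoted snippet: a list whose items are all dicts.
    return isinstance(obj, list) and all(isinstance(i, dict) for i in obj)


def classify_conversation(conversation: Any) -> str:
    """Hypothetical pre-check: return "single" or "batch", else raise."""
    if _is_message_list(conversation):
        return "single"
    if isinstance(conversation, list) and all(
        _is_message_list(c) for c in conversation
    ):
        return "batch"
    if hasattr(conversation, "messages"):
        # Conversation-like object: validate its message list instead.
        return classify_conversation(conversation.messages)
    raise ValueError("Invalid conversation format")


if __name__ == "__main__":
    messages = [{"role": "user", "content": "你好"}]
    print(classify_conversation(messages))    # one conversation
    print(classify_conversation([messages]))  # a batch of conversations
```

Running a check like this over a mapped dataset before training points at the offending rows directly instead of surfacing as a traceback from inside the tokenizer.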
