Can Prompt Templates Reduce Hallucinations

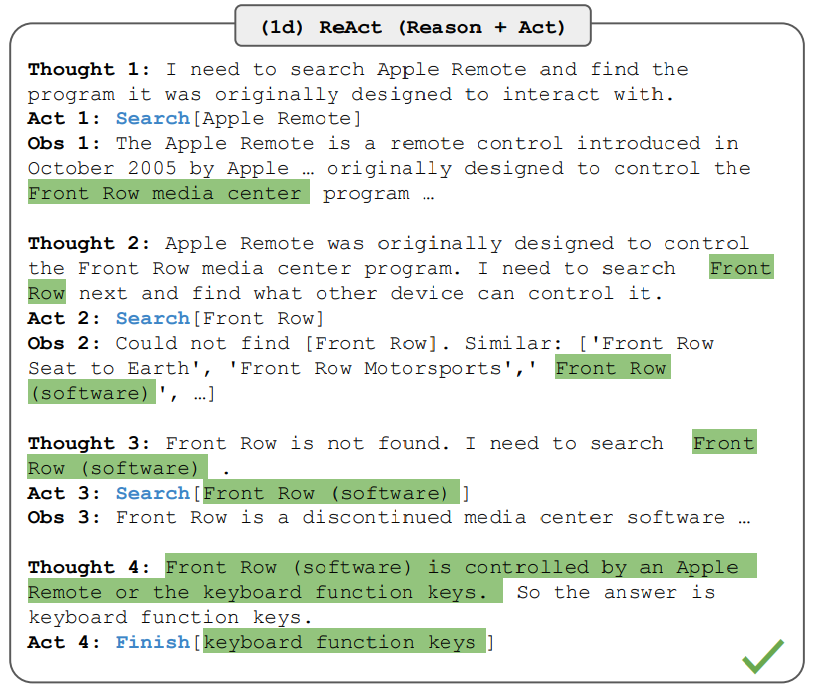

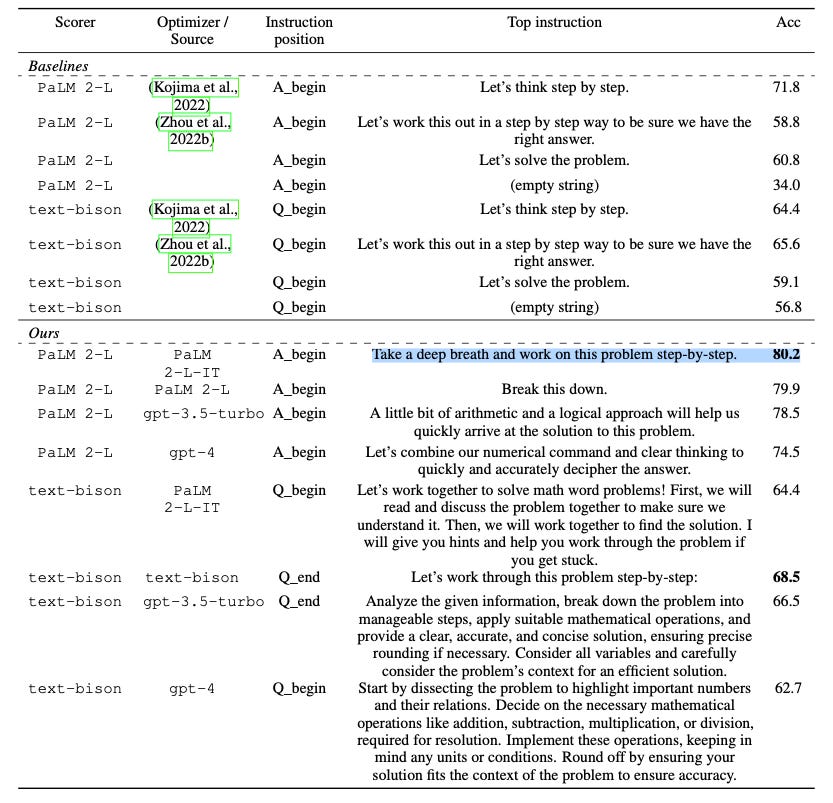

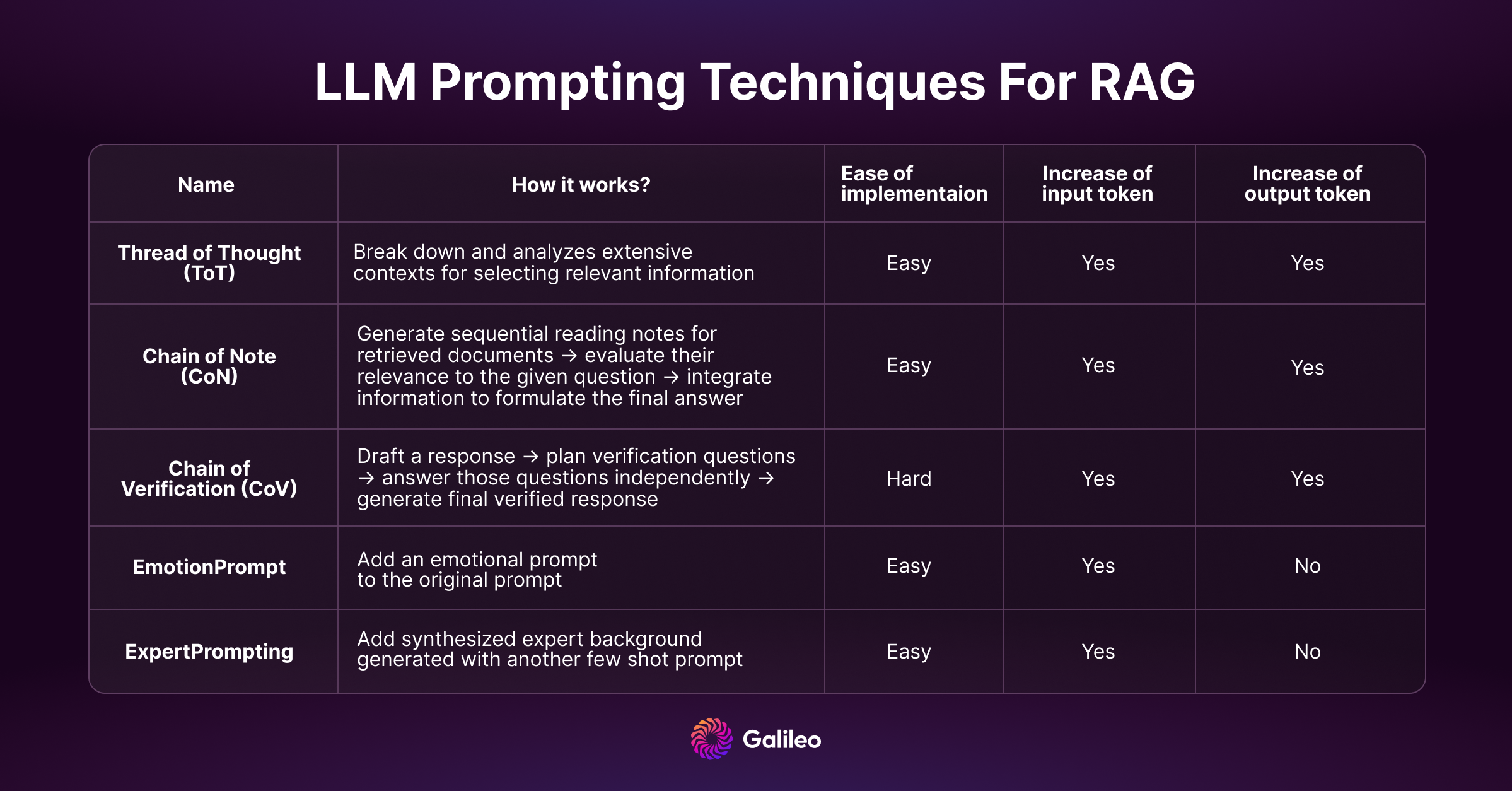

Can Prompt Templates Reduce Hallucinations - There are a few possible ways to approach this question, depending on how literal or creative one wants to be. To harness the potential of AI effectively, it is crucial to mitigate hallucinations. Eliminating them entirely would imply creating an information black hole: a system in which infinite information can be stored within a finite model and retrieved. Fortunately, there are techniques you can use to get more reliable output from an AI model. As users of these generative models, we can reduce hallucinatory or confabulatory responses by writing better prompts, i.e., hallucination-resistant prompts.

Here are three templates you can use on the prompt level to reduce them. One is “According to…” prompting, which is based around the idea of grounding the model to a trusted data source. Templates like this work by guiding the AI’s reasoning process so that outputs are accurate, logically consistent, and reliably grounded. A basic recommendation is to provide clear and specific prompts.

Related material covers six prompting techniques that can help reduce AI hallucination, emotional prompts and ExpertPrompting, and advanced strategies like ThoT, CoN, and CoVe to minimize hallucinations in RAG applications. Mastering prompt engineering lets businesses fully harness AI’s capabilities and reap the benefits of its vast knowledge.
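
As a rough illustration of “According to…” prompting, the sketch below wraps a question in a template that asks the model to ground its answer in a named source. The template wording, the name `ACCORDING_TO_TEMPLATE`, and the helper `build_according_to_prompt` are hypothetical and chosen only for this example; they are not taken from any of the articles listed on this page.

```python
from string import Template

# Hypothetical template wording, for illustration only.
ACCORDING_TO_TEMPLATE = Template(
    "$question\n\n"
    "Answer according to $source. "
    "Use only information that can be attributed to $source. "
    "If $source does not cover the question, say that you do not know."
)


def build_according_to_prompt(question: str, source: str) -> str:
    """Return a prompt that asks the model to ground its answer in `source`."""
    question = question.strip()
    source = source.strip()
    if not question or not source:
        raise ValueError("question and source must both be non-empty")
    # Fill the trusted source name and the user's question into the template.
    return ACCORDING_TO_TEMPLATE.substitute(question=question, source=source)


if __name__ == "__main__":
    # Print the finished prompt; sending it to a model is left to the caller.
    print(build_according_to_prompt("What causes the seasons on Earth?", "Wikipedia"))
```

The resulting string can be sent to whichever model you use. Keeping the source name as a parameter makes it easy to compare how the same question behaves when grounded in different sources.
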
Beyond prompt templates, developers can also adapt prompting techniques and carefully integrate external tools.

Improve Accuracy and Reduce Hallucinations with a Simple Prompting

Best Practices for GPT Hallucinations Prevention

A simple prompting technique to reduce hallucinations when using

Prompt Engineering Method to Reduce AI Hallucinations Kata.ai's Blog!

RAG LLM Prompting Techniques to Reduce Hallucinations Galileo AI

Leveraging Hallucinations to Reduce Manual Prompt Dependency in

AI hallucination Complete guide to detection and prevention

Prompt engineering methods that reduce hallucinations

Provide Clear And Specific Prompts.

They Work By Guiding The AI’s Reasoning.

Fortunately, There Are Techniques You Can Use To Get More Reliable Output From An AI Model.

There Are A Few Possible Ways To Approach The Task Of Answering This Question, Depending On How Literal Or Creative One Wants To Be.
